Thursday, October 11, 2007

Programming Collective Intelligence - Viewing Data in Two Dimensions

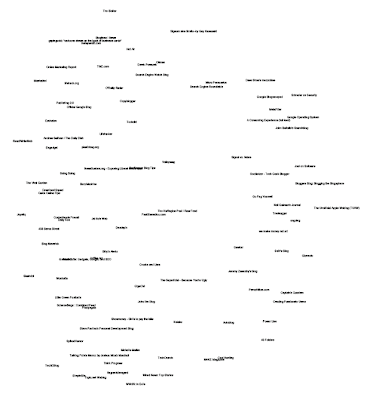

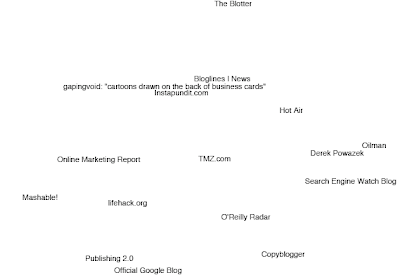

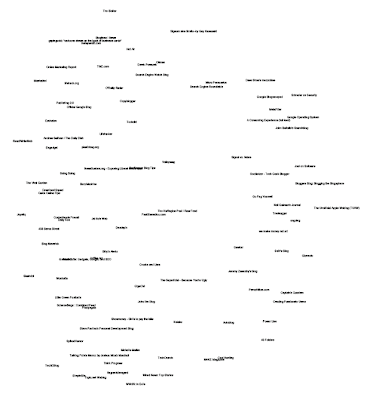

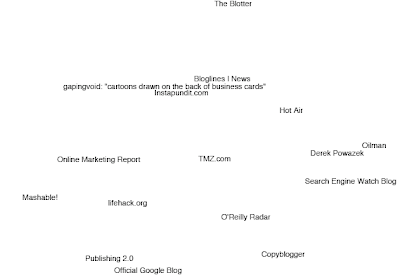

An example of taking data in multiple dimensions and "scaling" it to two dimensions, where the distances between data points is proportional to the multi-dimensional distance. Again, this one is basically an imperative rewrite. I hope to be able to exploit more of Lisp's power in the next chapter, which includes some neural networks. The big revelation from this, if anyone says Lisp is unreadable, I'd show them

I replaced clusters.lisp, new code is in it.

Zooming in,

fakedist[i][j] = sqrt(sum([pow(loc[i][x] - loc[j][x], 2)

for x in range(len(loc[i]))]))

I replaced clusters.lisp, new code is in it.

Zooming in,

Comments:

<< Home

Thanks for the new instalment.

A few comments:

The line after "; Move points in proportion to error" seems wrong. Should be incf not setf, otherwise you'll only consider the last element for moving in x-direction.

Isn't it quite wasteful to always allocate a new grad array? Just resetting it to 0 in each round would be enough and not cons quite so much. (I was thinking of fill but that only works on plain sequences, so one would have to recast it to one "(fill (make-array (* n 2) :displaced-to grad) 0.0))" -- not the prettiest solution maybe)

Not sure how much data you have, but the locality of access isn't as good as good be. E.g. why calculate the whole of fakedist beforehand if you only always need one element which you could calculate then, when needed?

And another thing I was wondering (I don't have the book yet -- we have a postal strike here -- so maybe this is explained in the book): How do you guarantee that the elements of loc remain in the range [0;1]? If they drift outside your display routine will draw a few items outside the page, or not?

A few comments:

The line after "; Move points in proportion to error" seems wrong. Should be incf not setf, otherwise you'll only consider the last element for moving in x-direction.

Isn't it quite wasteful to always allocate a new grad array? Just resetting it to 0 in each round would be enough and not cons quite so much. (I was thinking of fill but that only works on plain sequences, so one would have to recast it to one "(fill (make-array (* n 2) :displaced-to grad) 0.0))" -- not the prettiest solution maybe)

Not sure how much data you have, but the locality of access isn't as good as good be. E.g. why calculate the whole of fakedist beforehand if you only always need one element which you could calculate then, when needed?

And another thing I was wondering (I don't have the book yet -- we have a postal strike here -- so maybe this is explained in the book): How do you guarantee that the elements of loc remain in the range [0;1]? If they drift outside your display routine will draw a few items outside the page, or not?

Cool Lisp trick of the day for ya: #'(lambda (x) x) is #'identity. But in this case, it looks like you're just using it to convert a list into a vector, so #'vector would be even simpler: (apply #'vector ...)

:-)

:-)

You are correct about the setf vs. incf. Thanks.

I'm not sure what you mean about the locality of fakedist. All the values of fakedist (except where (eq k j)) are used in the

(dotimes (k n)

(dotimes (j n

loop.

I'm not sure what you mean about the locality of fakedist. All the values of fakedist (except where (eq k j)) are used in the

(dotimes (k n)

(dotimes (j n

loop.

Sorry about being terse about the locality.

What I meant is that you loop over all n times n elements to calculate the fakedist. And then you go over the same list again to calculate the error. fakedist[i, j] is accessed only when calculating error[i,j]. So why not calculate the value within the loop where it is needed. I see three advantages:

a) the code is clearer -- it is obvious that the fakedist is only needed there

b) memory access locality is more local, i.e. you only iterate once -- if you have a lot of data, you have half the cache misses, and also values from loc might still be in registers giving the compiler more chances at optimising the calculations

c) doing all the calculations for an element in one place makes it easier to parallelise the calculation. I had hoped to work on this this weekend but spent exactly 0 seconds on the computer thanks to familiy activities.

Post a Comment

What I meant is that you loop over all n times n elements to calculate the fakedist. And then you go over the same list again to calculate the error. fakedist[i, j] is accessed only when calculating error[i,j]. So why not calculate the value within the loop where it is needed. I see three advantages:

a) the code is clearer -- it is obvious that the fakedist is only needed there

b) memory access locality is more local, i.e. you only iterate once -- if you have a lot of data, you have half the cache misses, and also values from loc might still be in registers giving the compiler more chances at optimising the calculations

c) doing all the calculations for an element in one place makes it easier to parallelise the calculation. I had hoped to work on this this weekend but spent exactly 0 seconds on the computer thanks to familiy activities.

<< Home